This is my own experience with Interactive Prototype 1, which I want to share with you all.

1. Structure the code.

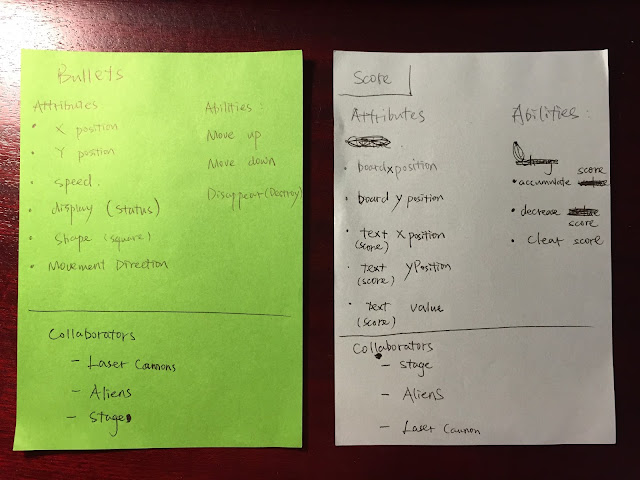

Before actually writing the code, we did an in-class exercise to think about the Classes, their Attributes (Variables) and Abilities (Functions) of our games or wearables, which was a key step towards a clearer idea of how to implement our concepts. I also found the learning materials, such as the pong game, quite helpful for quickly becoming familiar with AS3. I highly recommend studying the code of the examples and figuring out why the author structured the code that way.

2. Debug

Adobe Flash is not a good development environment, as debugging is hard and there are no detailed logs for errors. The most effective approach I learned is to use trace statements to check whether execution reaches a specific line.
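A minimal sketch of this checkpoint style of debugging. The game itself was written in AS3, where the built-in trace() prints to the output panel; Python is used here only for illustration, and the `trace` helper and `fire_bullet` function are hypothetical names, not taken from the project.

```python
trace_log = []  # keeps every checkpoint so the order of execution can be inspected


def trace(message):
    """Record a checkpoint message and print it, similar in spirit to AS3's trace()."""
    trace_log.append(message)
    print(message)


def fire_bullet(ammo):
    """Hypothetical game function with a checkpoint before every branch."""
    trace("fire_bullet: entered")
    if ammo <= 0:
        trace("fire_bullet: no ammo, returning early")
        return False
    trace("fire_bullet: bullet created")
    return True


# If "bullet created" never shows up, the early return is the place to look.
fire_bullet(0)
```

Reading the messages in order shows exactly how far the code got before it stopped doing what was expected.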

3. Fixing bugs

I implemented the majority of the functions but couldn't fix a bug in the 'random shooting' feature. Everything seemed alright according to the code's logic. I spent a whole evening trying to discover why it didn't work and found that the problem was caused by the 'Timer Event', but I still had no idea how to fix it. Peter eventually solved the bug: he got rid of one 'Timer Event' (there were two initially) and it worked.
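The original code is not shown here, so the following is only a sketch of one way two timers can interfere with each other, not a reconstruction of the actual bug. It assumes both timers trigger the same shooting handler; `SimpleTimer` and `count_shots` are hypothetical names, and Python stands in for AS3 purely for illustration.

```python
class SimpleTimer:
    """Hypothetical stand-in for a timer that calls its listeners on every tick."""

    def __init__(self):
        self.listeners = []

    def add_listener(self, callback):
        self.listeners.append(callback)

    def tick(self):
        for callback in self.listeners:
            callback()


def count_shots(timer_count, ticks):
    """Attach the same shooting handler to each timer and count the shots fired."""
    shots = []
    timers = [SimpleTimer() for _ in range(timer_count)]
    for timer in timers:
        timer.add_listener(lambda: shots.append("shot"))
    for _ in range(ticks):
        for timer in timers:
            timer.tick()
    return len(shots)


shots_with_two_timers = count_shots(2, 5)  # handler runs twice per tick
shots_with_one_timer = count_shots(1, 5)   # handler runs once per tick
```

Under this assumption, a second timer doubles how often the handler runs, which can make behaviour that looks correct in the code appear random on screen.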

Besides, although I referred to an example, it still took me about two days to add the 'restart' function for another game. AS3, like C/C++ and many other programming languages, has a main function, and the sub-functions could not call the main function to loop the game. I had to write two other functions that call each other, using the main function only as the entry point. Once the game starts, it executes the main function, then loops between the two functions without ever leaving that loop.
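The structure described above can be sketched roughly as follows. The function names `start_game` and `game_over` are hypothetical, Python is used only for illustration, and a scripted list of restart choices replaces real player input so that the example terminates (the real game loops for as long as the player keeps restarting).

```python
events = []  # records the order in which the functions run


def main(restart_choices):
    """Entry point: runs once and hands control to the game loop."""
    events.append("main")
    start_game(list(restart_choices))


def start_game(restart_choices):
    """Set up and play one round, then move to the game-over state."""
    events.append("start_game")
    game_over(restart_choices)


def game_over(restart_choices):
    """Restart by calling start_game again; main is never called a second time."""
    events.append("game_over")
    if restart_choices and restart_choices.pop(0):
        start_game(restart_choices)


# The player restarts twice, then quits.
main([True, True, False])
```

The entry point appears once in `events`, while the other two functions alternate for every restart.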

Saturday, September 26, 2015

WK9_Exercise_Experience Prototype

Restaurant Dining Experience for Customers

What is the existing experience?

- ordering food

- booking seat/ reservation

- find dining

- parking

- facility use: WiFi, add water, air-conditioning, waiting for the seat.

- pay, check the bill

What external/ internal factors impact on the experience?

1. external:

- decoration

- location

- car park

- views/surroundings/noises

- entertainment

- temperature

- food (flavor, time)

- price

- service

2. internal:

- mood

- personality

- gender

- age

- occupation

What aspects of the existing experience could be enhanced/ augmented/ supported with technology?

- App for finding car parks.

- use a mobile app to increase the efficiency to place an order

- a digital menu that shows the ingredients and energy of the dishes. It can also calculate the total energy of the food ordered.

- provide an automatic pay machine on each table.
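As a rough illustration of the digital menu idea in the list above, the total energy of an order is just the sum of each dish's energy multiplied by its quantity. The dish names and energy values below are made up for the example and do not come from any real menu.

```python
# Hypothetical menu: dish name -> energy in kJ (made-up values for illustration only)
MENU_ENERGY_KJ = {"salad": 600, "pasta": 2400, "soup": 900}


def total_energy(order):
    """Sum the energy of each ordered dish times its quantity."""
    return sum(MENU_ENERGY_KJ[dish] * quantity for dish, quantity in order.items())


# One salad and two pastas: 600 + 2 * 2400 kJ
order_total = total_energy({"salad": 1, "pasta": 2})
```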

How would introducing technology into this context change the experience?

The new technology could help customers by improving service efficiency, for example through the app for finding car parks or the automatic pay service. On the other hand, some technologies would create a better user experience, such as the digital menu that shows the ingredients and energy of the dishes.

What experience scenarios might you test with the technology?

Taking the digital menu as an example, I'll ask a young female customer to order food and give her both the paper menu and the digital menu. I'll then observe which menu she chooses, how she uses it, and how she orders the food.

Evaluation of the Interactive Prototype 1

Outcome and Reflection

Before doing the survey, I briefly introduced the concept of the game to the users and then asked them to play it. I did not tell them how to interact with the game at the beginning, so some users struggled with shooting the bullets. Only then did I tell them how to shoot the enemy.

The outcome of the user testing and the reflection on each specific question are listed below.

game than the video prototype. Only two users felt confused about how to play the game and they both wanted some hints to guide the user.

Every user completely understood how to interact with the game, and I was happy to see that. One user had no idea how to win the game, which was not relevant to the question but is still worth considering. To tackle this problem, I think I'll pop up a winning message after the player destroys all the aliens.

From the pie chart, we can see that more than half of the users rated the attractiveness of the game's interactions as about average rather than giving it full marks. Some of them gave me very good suggestions:

- add a timer to count the game time

- add background music

- add a death state to the player

- create difficulty modes

According to the plan, the background music and 'death state' features are what I'm going to incorporate in this game at the refinement stage. The timer idea was good, as it might increase the tension of the game and make it more exciting. The difficulty modes are also feasible and a useful suggestion: I just need to develop various types of enemies with different speed and firepower. This will also improve the user experience because users will be able to choose the difficulty mode they want to play.
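One possible way to organise the difficulty modes is a lookup table from mode name to enemy settings. This is only a sketch: the mode names, the `EnemyType` class, and all the numbers are hypothetical, and Python is used only for illustration since the game itself is in AS3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnemyType:
    speed: float      # movement per frame (hypothetical unit)
    fire_rate: float  # shots per second (hypothetical unit)


# Hypothetical settings: harder modes get faster enemies with more firepower.
DIFFICULTY_MODES = {
    "easy": EnemyType(speed=1.0, fire_rate=0.5),
    "normal": EnemyType(speed=2.0, fire_rate=1.0),
    "hard": EnemyType(speed=3.0, fire_rate=2.0),
}


def enemy_for(mode):
    """Return the enemy settings for the difficulty mode the player picked."""
    return DIFFICULTY_MODES[mode]
```

Keeping the settings in one table means a new mode only needs a new entry rather than new enemy code.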

Problems

- bullet speed is a bit slow compared to the aliens' speed.

- display instructions when all the enemies are destroyed.

- the player can die.

These problems are exactly the same as the ones mentioned in the last section, so I won't expand on them again.

Concerns of changing the interaction from keyboard input to physical input.

- use the characters instead of numbers

- provide a 'random' instruction on the screen that can be easily perceived even when the player is moving his body.

The second suggestion was really good because, based on my observation, even after I told the users how to shoot, some of them still had trouble pressing the right key. The reason might be that the key code is displayed at the bottom-right corner of the stage and is not big or bright enough to notice. I will reconsider where and how to show the random key code in the future game design.

The overall impression of the prototype was good, and most users thought the prototype had grasped the essence of the game concept. However, most of the users thought the prototype was of medium complexity. I asked Peter whether I needed to add more features at this stage because I also thought it was simple, but he told me the complexity was enough for this assignment. Thus, I decided to ignore this feedback.

4 out of the 5 testers who answered the last question misunderstood it. What I wanted to get was their own experience of building this prototype rather than suggestions for my prototype. Only one guy said he knew my pain, and I think I know who he is.

Effectiveness

The prototype and the testing session were both effective. Although the prototype is a brief version of the final game, it already covers all the key interactions of the game. The testing session also helped me get some useful feedback that can be applied in the future game, such as the timer and the difficulty modes. Besides, observing how the users played the game, how they struggled with shooting, and what else happened while they were playing also gave me a glimpse of how the game works in practice. For instance, the layout made it difficult for half of the users to notice the random key code and connect it to the 'shooting' operation, even after I pointed out that there were connections between different elements on the screen.

The testing protocol was more detailed than the last one. I designed 6 groups of questions for this testing session. Based on the reflection on the last testing session, I put more qualitative questions in the survey this time in order to get more constructive feedback. For the same reason, I told the users to skip any question they had no clear answer to, to ensure the accuracy of the feedback.

Constraints

The unpolished prototype implemented only the part of the functions that covers the key interactions. The testers thus might not have had a comprehensive understanding of the game and what it will look like in the future. Fortunately, the game itself was intuitive, so there was barely any constraint on the testers' ability to understand or play the game.

Besides, the survey contained many questions, most of which were text questions that require more thinking and brainstorming, so many testers seemed a bit tired of the survey and skipped many questions. You can see from above that the average number of comments for each text question was around 5, which is just half of the testers who did the survey.

Implications

For future projects, I'll add some of the features such as 'audio', 'game restart', 'timer', 'difficulty mode', 'player status', 'real character icon', and 'winning message' to refine it and make it more fun and exciting. Moreover, in order to improve the user experience, the layout, color scheme, and the random key display also need modification.

For future testing sessions, I'll balance the number of qualitative and quantitative questions and try not to make the survey too simple or too boring. I also need to reduce the total number of questions and focus on the key aspects that are supposed to be tested at the current stage. For instance, Interactive Prototype 1 is more focused on the interactions of the game, so the questions for the testing session should also be designed to get feedback related to the interactions.

WK8_exercise_Innovative Physical Interactions

We were asked to come up with at least 5 different physical interactions (focus on tangible and/or embodied) for Email, Twitter, and Super Mario Bros.

Email:

- a steering wheel. Turn it to select emails and honk to check the detailed content.

- a card case with business cards. Remove a card from the card case to delete an Email.

- a switch. Switch it on to mark an Email as unread and off to mark it as read.

- a flag shape on a board. Press the shape to flag or unflag an Email.

- a real mailbox. Open the mailbox to enter the inbox and close to go back to the default page.

Twitter:

- a bird to read tweets and post your tweet by speaking to it. A sensor will be embedded to interpret the sound to text.

- a valve. Spin to retweet.

- a metal letter board to type in the tweets.

- piano keys. Press white key to comment, black key to retweet.

- a pen and a paper. Write on the paper and it'll automatically convert to digital text on the screen.

Super Mario Bros:

- a seesaw to control the moving direction and the jumping of Mario.

- two drumsticks and a drum. Beat the drum with a single drumstick to move forward or backward, beat the drum with both drumsticks to jump, and hit the two drumsticks together to shoot.

- a scale. Put the weights on one side to control the moving direction.

- a gun to control the shooting.

- a wire coil on a metal tube. Spin the coil clockwise or counterclockwise to control the moving direction. Move the coil up or down to jump.

As my game mashup idea also has moving and shooting features similar to those of Super Mario Bros., I'd like to apply the drumstick idea to the Makey-Makey project.

Reflection on the survey design of the video prototype

I asked 8 questions in total for the video prototype testing session. 5 of them were quantitative questions with concrete scores to measure the aspects that I focused on, while the remaining 3 were qualitative questions asked to get in-depth suggestions for the video prototype and the whole concept. These questions are listed below.

1. Can you understand the basic rules of the game? (1-5) [Multiple Choice]

2. Do I communicate the design rationale clear enough? (1-5) [Multiple Choice]

3. Do you think it's an interesting game? (1-5) [Multiple Choice]

4. Why or why is it not interesting? [Text Question]

5. Can you foresee any problems of the game? (e.g. rules, interactions, etc) [Text Question]

6. Do you have any suggestions to refine the game to address the potential problems that you concern? [Text Question]

7. What do you think of the quality of the video? (1-5) [Multiple Choice]

8. What do you think of the quality of the audio? (1-5) [Multiple Choice]

The types of study we did in class were A/B testing and a survey but, unfortunately, I missed the A/B testing part. Therefore, what I really did was just an online survey, inviting the class to watch my video.

The purpose of question 1 was to check the clarity of the game rules. It's a pretty straightforward way to find out whether the basic rules were communicated well and understood by the audience. The feedback gives me a general idea of whether the game rules were clear or not.

The purpose of question 2 was to check the clarity of the design rationale of the concept, which was very similar to the first one.

The 3rd question aimed to find out whether it was a fun game. It was one of the most important questions in this survey because it could significantly affect future iterations. I also used a multiple-choice format to get an average score for how interesting the game is, so that I'd have a rough idea of its current fun level. However, I didn't define 'fun' in the question; it should have been broken down into several more specific questions that cover all the aspects of the definition of 'fun'.

The 7th and the last questions were asked to get rough scores for the quality of the video and audio of the prototype. I made the two questions hastily and forgot to put a text comment option under each question. What I found was that scores alone, without comments, were nearly useless for helping me improve my video-making skills; they could only be an encouragement or a pain.

All 5 of these quantitative questions had the same problem: the options were too vague. I could have defined or expanded the options to make them clearer and more specific. For instance, for the 1st question, it might have been better to change the score 1-5 to 5 labelled levels: 'completely confused', 'vague', 'understand part of it', 'understand most of the rules', 'completely understand'.
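As a small sketch of that idea, the numeric scores for question 1 can be mapped to the five labels listed above, so that both the survey and the results show the meaning of each score. The mapping order (1 as the lowest level) and the names `UNDERSTANDING_LABELS` and `label_for` are assumptions made for this example.

```python
# Labels taken from the text above; 1 is assumed to be the lowest level.
UNDERSTANDING_LABELS = {
    1: "completely confused",
    2: "vague",
    3: "understand part of it",
    4: "understand most of the rules",
    5: "completely understand",
}


def label_for(score):
    """Translate a 1-5 score into its descriptive label."""
    return UNDERSTANDING_LABELS[score]


def summarise(scores):
    """Count how many answers fall under each label."""
    return {label: sum(1 for s in scores if s == value)
            for value, label in UNDERSTANDING_LABELS.items()}
```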

As for the three qualitative questions, question 4 followed up on question 3 to get the underlying reason why the game is or is not interesting. As I mentioned above, suggestions for making the game more interesting were the key feedback that I wanted to get from the survey. And I did in fact get some useful suggestions from this question.

The 5th and 6th questions form a group, which aimed to find and address the potential problems of the game. It was like inviting the interviewees to brainstorm for me and help me discover the current design defects as well as possible future challenges.

Overall, as the sample (number of interviewees) was not that big, the simple and concise quantitative questions were not as useful as the qualitative questions. I thus planned to put more qualitative questions in the next survey to get more useful feedback.

Sunday, September 6, 2015

WK6 Coding Game in Actionscript 3.0

Overview

I'm thinking of modifying the current shooting control mechanism of the Space Invaders game.

The new control will be based on the status of squares displayed on the stage, with each square connected to a specific key-down event. For instance, there are 6 squares altogether, corresponding to keys 1-6 respectively. Some of the squares will randomly change their status to 'activate' (shown by switching the background color of the square), and the user needs to press the corresponding keys to shoot.
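A minimal sketch of this control mechanism, written in Python for illustration rather than AS3. It assumes that pressing the key of any currently active square fires a shot, which is one reading of the description above; `pick_active_squares` and `can_shoot` are hypothetical names.

```python
import random

SQUARE_COUNT = 6  # squares 1-6 correspond to keys 1-6


def pick_active_squares(rng):
    """Randomly choose how many squares light up, then which ones."""
    quantity = rng.randint(1, SQUARE_COUNT)
    return set(rng.sample(range(1, SQUARE_COUNT + 1), quantity))


def can_shoot(pressed_key, active_squares):
    """A key press fires only if its square is currently active (assumption)."""
    return pressed_key in active_squares


rng = random.Random(0)  # seeded so the example is repeatable
active = pick_active_squares(rng)
```

In the game, `pick_active_squares` would run on a timer, and the key-down handler would call `can_shoot` with the number of the pressed key.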

Object: Alien

Attributes (variables):

- shape

- x position

- y position

- speed

- status

- movement direction

Abilities (functions):

- move down

- move left

- move right

- shooting

- disappear (destroyed)

Collaborators (other objects):

- stage

- score

- bullet

- sound
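The Alien object above could be turned into a class along these lines. This is a sketch in Python rather than the AS3 the game uses; it covers only the position, speed, status, and direction attributes, and the method names are hypothetical. As on the Flash stage, y is assumed to grow downwards.

```python
from dataclasses import dataclass


@dataclass
class Alien:
    x: float
    y: float
    speed: float
    direction: int = 1   # +1 moves right, -1 moves left
    alive: bool = True   # the "status" attribute

    def move_sideways(self):
        """Move left or right by one step, depending on the current direction."""
        self.x += self.speed * self.direction

    def move_down(self, step):
        """Move towards the bottom of the stage (y grows downwards)."""
        self.y += step

    def destroy(self):
        """Mark the alien as destroyed so the stage can stop drawing it."""
        self.alive = False


alien = Alien(x=10, y=0, speed=2)
alien.move_sideways()   # x: 10 -> 12
alien.direction = -1
alien.move_sideways()   # x: 12 -> 10
alien.move_sideways()   # x: 10 -> 8
alien.move_down(5)      # y: 0 -> 5
```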

Object: Laser Cannon

Attributes (variables):

- shape

- x position

- y position

- speed

- status

- number of times shot

- movement direction

Abilities (functions):

- move left

- move right

- shooting

- reset (to the start position)

- disappear (destroyed)

Collaborators (other objects):

- stage

- score

- bullet

- sound

Object: Bullet

Attributes (variables):

- shape

- x position

- y position

- speed

- status

- movement direction

Abilities (functions):

- move down

- move up

- disappear (crashed)

Collaborators (other objects):

- laser cannon

- alien

- stage

Object: Score

Attributes (variables):

- shape

- score board x position

- score board y position

- text x position

- text y position

- counter

- text value

Abilities (functions):

- accumulate score

- decrease score

- clear score

Collaborators (other objects):

- laser cannon

- alien

- stage
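The Score object is small enough to sketch in full. The version below is in Python for illustration and leaves out the display attributes (shape and positions); stopping the score at zero when it decreases is an assumption, not something the list above specifies.

```python
class Score:
    """Keeps the counter and the text shown on the score board."""

    def __init__(self):
        self.counter = 0

    def accumulate(self, points):
        self.counter += points

    def decrease(self, points):
        # Assumption: the score never drops below zero.
        self.counter = max(0, self.counter - points)

    def clear(self):
        self.counter = 0

    def text_value(self):
        return f"Score: {self.counter}"


score = Score()
score.accumulate(30)
score.decrease(10)
```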

Object: Stage

Attributes (variables):

- size

- colour

Abilities (functions):

- display alien movement

- display alien disappear

- display cannon movement

- display cannon disappear

- play sound

- display shooting

- display score

Collaborators (other objects):

- laser cannon

- alien

- audio

- bullet

- twister board

- score

- shooting control

Object: Twister board

Attributes (variables):

- size

- shape

- x position

- y position

- colour

- status

- colour-switching frequency

- index of the squares

Abilities (functions):

- switch colour (light up and down)

Collaborators (other objects):

- stage

- shooting

Object: Shooting

Attributes (variables):

- random quantity of the squares that will light up

- random index of the squares that will light up

- key code (corresponding to the key-down event)

Abilities (functions):

- decide which keys control shooting

Collaborators (other objects):

- stage

- twister board

Object: Audio

Attributes (variables):

- status

- name

- URL

Abilities (functions):

- load audio

- play audio

Collaborators (other objects):

- stage

- laser cannon

- alien
